What will glasses of the future look like? Many people must have imagined it at some point.

About the same size as current glasses, they could transform into a mobile HUD while navigating. During video calls, a holographic image of the other person would float in the air. The lenses would display everything you need… Basically, they’d be something like the glasses worn by Vegeta or Iron Man.

On Sept. 25, Meta introduced its first AR smart glasses, Orion, bringing us one step closer to this ideal product. While AR glasses have been released over the past few years, they all shared some obvious flaws: they either had limited functionality, essentially functioning as portable projectors, or were bulky and prohibitively expensive, making them unsuitable for the mass market.

Compared to previous products, Meta Orion’s sleek design and integrated AR features rekindle hope that AR glasses can go mainstream. Orion was not the only announcement at Meta Connect 2024, which marks the event’s 10th anniversary. Mark Zuckerberg unveiled a full lineup of new products and updates:

- Meta Orion smart glasses: Industry-leading AR experience;

- Meta Quest 3S MR headset: The “lite version” of Quest 3, with a lower price but no groundbreaking features;

- Continued upgrades to Meta AI to enhance the user experience of the headsets;

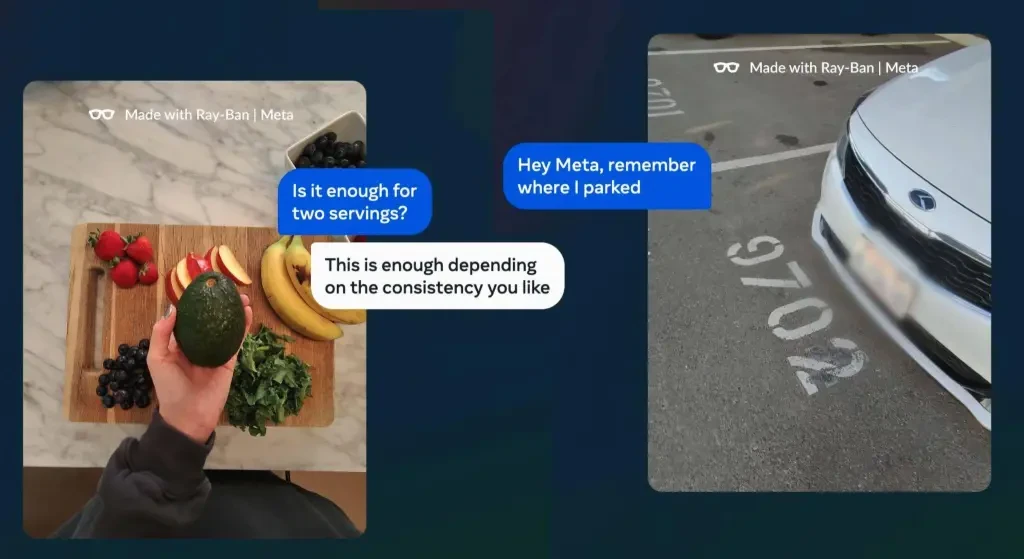

- New AI features for Ray-Ban Meta: Real-time translation, personalized style suggestions, and even help finding your car;

- Llama 3.2, Meta’s first open-source large language model (LLM) with multimodal capabilities.

AI combined with hardware is the hot topic in consumer electronics this year. But how exactly does Meta integrate its powerful AI models with its new hardware? And what do the highly anticipated Meta Orion smart glasses look like? Let’s take a closer look.

Meta Orion AR Glasses: The Stunning Debut of a Decade-Long Project

In Zuckerberg’s vision, glasses should be the ultimate futuristic device. Meta had already ventured into the smart glasses market with its Ray-Ban Meta collaboration, which proved a successful test of consumer interest in smart eyewear.

However, Ray-Ban Meta only accomplished half of Meta’s ultimate goal. The product primarily offered audio, photography, and some basic AI features—it was still essentially a traditional wearable device.

The complete version, which Zuckerberg called “flawless,” is the Meta Orion AR glasses. This top-secret project has been in development for over a decade, with billions of dollars invested. Today, the world finally gets to see it.

The first thing you notice about the Meta Orion glasses is their sleek design, which closely resembles regular sunglasses. This instantly sets them apart from bulkier competitors on the market. After all, glasses should look good too.

Meta achieved this by opting not to cram all components into a single frame. Instead, the Meta Orion consists of three separate parts: the glasses themselves, a hand-tracking wristband, and a “compute module” about the size of a remote control. These three components are wirelessly connected.

This modular design, combined with the use of lightweight magnesium materials, allows the Orion glasses to weigh an astonishingly light 98 grams. For comparison, Snap’s new AR glasses, Spectacles, released on Sept. 17, weigh a hefty 226 grams.

Even more impressive, the lightweight Orion offers a battery life of around two hours, whereas the bulkier Spectacles only manage a brief 45 minutes.

As for the core AR projection capabilities, Orion is “well ahead of the competition” in several key areas. Instead of a traditional glass display, Orion uses silicon carbide lenses. Micro projectors embedded in the frame emit light into waveguides in the lenses, which direct it toward the eye to create AR visuals of varying depth and size. Zuckerberg refers to this as a “panoramic view.”

According to testing by The Verge, Meta Orion’s field of view reaches an impressive 70 degrees, making it possibly the widest in the industry for AR glasses.

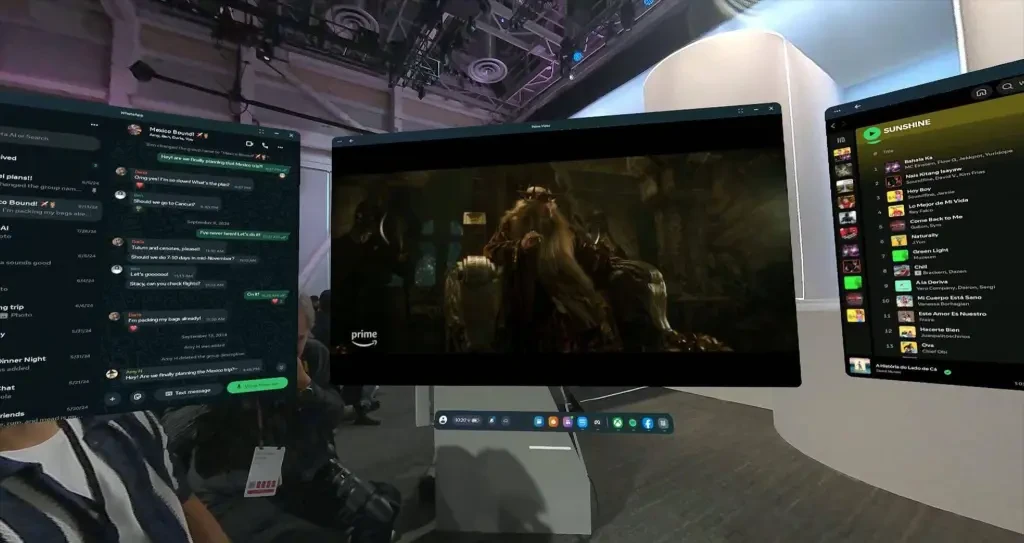

In the demo videos available, users can wear the glasses to open multiple windows of Meta Horizon Apps for multitasking, or use Meta AI to recognize and label real-world objects.

Even if the user’s gaze shifts away from these windows, the virtual projections remain in place, ready to reappear when the user’s attention returns.

As for image and text clarity, it’s good enough for viewing photos and reading text, but you probably won’t want to use these glasses to watch movies—at least not yet.

Meta’s expertise in social networking also creates new and exciting possibilities with AR glasses. When on a call with a friend, their holographic projection appears right in front of you, though the current version is still somewhat rough.

Meta Orion also includes an inward-facing camera that scans the wearer’s face to create a real-time avatar for video calls with mobile users.

In terms of interaction, Meta Orion supports eye-tracking, hand tracking, and AI voice commands. When paired with the wristband, users can perform more precise hand gestures.

The wristband recognizes several hand gestures: pinching your thumb and index finger selects content, while pinching your middle finger and thumb calls up or hides the app launcher. If you press your thumb against your clenched fist, it allows you to scroll up or down, mimicking the gesture of flipping a coin. Overall, the interactions feel natural.
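To make the mapping concrete, here is a minimal Python sketch of how gestures like these could be routed to interface actions. It is an illustration only, not Meta’s software: the `Gesture` enum, the `OrionUiState` class, and all of its fields are hypothetical names invented for this example, and scrolling is modeled as a simple counter.

```python
from enum import Enum, auto


class Gesture(Enum):
    """Hypothetical labels for the gestures described in the article."""
    INDEX_PINCH = auto()        # thumb + index finger: select content
    MIDDLE_PINCH = auto()       # thumb + middle finger: show/hide launcher
    THUMB_SCROLL_UP = auto()    # thumb moved on a clenched fist: scroll up
    THUMB_SCROLL_DOWN = auto()  # thumb moved on a clenched fist: scroll down


class OrionUiState:
    """Toy interface state (assumed for illustration, not a real API)."""

    def __init__(self):
        self.launcher_visible = False
        self.scroll_offset = 0
        self.selected = None

    def handle(self, gesture, target=None):
        """Apply one recognized gesture to the interface state."""
        if gesture is Gesture.INDEX_PINCH:
            # Select whatever item is currently targeted.
            self.selected = target
        elif gesture is Gesture.MIDDLE_PINCH:
            # The same gesture both opens and hides the launcher.
            self.launcher_visible = not self.launcher_visible
        elif gesture is Gesture.THUMB_SCROLL_UP:
            self.scroll_offset -= 1
        elif gesture is Gesture.THUMB_SCROLL_DOWN:
            self.scroll_offset += 1
        return self


if __name__ == "__main__":
    ui = OrionUiState()
    ui.handle(Gesture.MIDDLE_PINCH)                 # open the launcher
    ui.handle(Gesture.INDEX_PINCH, target="photo")  # select an item
    print(ui.launcher_visible, ui.selected, ui.scroll_offset)
```

A small, fixed vocabulary like this is part of why the interactions can feel natural: each gesture maps to exactly one action, so there is little to memorize.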

It’s also worth mentioning that the wristband offers haptic feedback, ensuring you know when your gesture has been successfully recognized—this addresses a major issue that many mixed-reality (MR) devices currently face.

The wristband works by reading electromyography (EMG) signals related to hand gestures. The Verge even likened it to “mind-reading.”

With this wristband, you can use your eyes as a pointer for the Orion interface and pinch to select. The overall experience is intuitive. More importantly, in public, you won’t need to awkwardly wave your hands in the air to issue commands. With your hands in your pockets, you can still control the AR glasses naturally.

Meta AI, which has already made an impact with the Ray-Ban glasses, takes things a step further with its integration into AR. Now, it can interact more deeply with the real world.

During a live demo, The Verge used Orion to quickly identify and label ingredients on a table, and then asked Meta AI to generate a smoothie recipe using those ingredients.

Although Mark Zuckerberg emphasized that Meta Orion is designed for consumers, the current version is still a prototype, available only to selected developers and testers. Among those testing it is NVIDIA’s CEO, Jensen Huang.

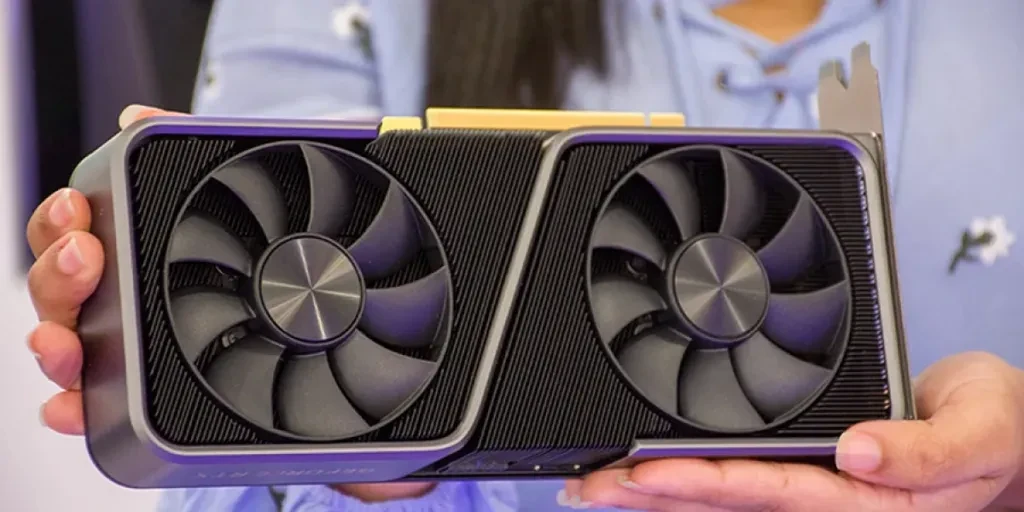

Meta Quest 3S: A Lite Version of Vision Pro?

Zuckerberg announced the price of the Quest 3S within ten seconds of taking the stage, which is rare for a consumer electronics launch. I must say, this direct, get-to-the-point approach is refreshing.

In short, the Meta Quest 3S is essentially a “lite version” of the Quest 3. The base model with 128GB starts at $299.99, while the 256GB version is priced at $399.99.

As for specs, the Quest 3S is powered by the Snapdragon XR2 Gen 2 processor, the same as the one in the Quest 3, and it also supports hand tracking.

After watching the presentation, I felt “Quest 2 Plus” would have been a more fitting name than “Quest 3S.” Reports suggest that, as a more budget-friendly option, the Quest 3S uses the same lenses as the Quest 2 and has a thicker body than the Quest 3.

Though the hardware specs of the Quest 3S don’t match those of the Quest 3, the software experience is virtually the same. Like its more expensive counterpart, the Quest 3S runs on Horizon OS, offering a wide range of entertainment and productivity features.

When the Quest 3 launched last year, reviews were mixed. The biggest criticisms involved significant lag and distortion when using video passthrough mode. Zuckerberg announced that after a year of optimization, the experience has seen major improvements, particularly in three key areas: VR functionality, hand tracking, and software compatibility.

Users can now transform 2D webpages into an immersive workspace, just like with Vision Pro, and arrange multiple open windows anywhere in virtual space.

Additionally, the Quest 3S’s theater mode can expand a single screen into a massive virtual theater, filling the entire space. While immersive viewing on headsets is not a new concept, the key difference lies in how many platforms and media sources are supported, which will define the product’s experience.

Quest 3S supports major Western media platforms like Netflix, YouTube, and Amazon Prime, all of which can be used in theater mode.

Movies and games are essential entertainment features for any VR headset, but it is the additional functionality that often shows off a product’s capabilities. One of the new features revealed at the event is Hyperscape on Quest 3S.

Using your phone, you can scan the room’s layout, including the furniture, and then recreate a nearly 1:1 model of the room within Quest 3S.

From the demonstration shown at the event, the recreated scene had impressive fidelity, with minimal jagged edges or distortions. This technology could potentially be used for immersive virtual tours of houses, museums, or landmarks, without the need to leave your home.

However, beyond this, the practicality of scene recreation and how it can be applied in different regions remains a question that Meta and Quest need to answer in the future.

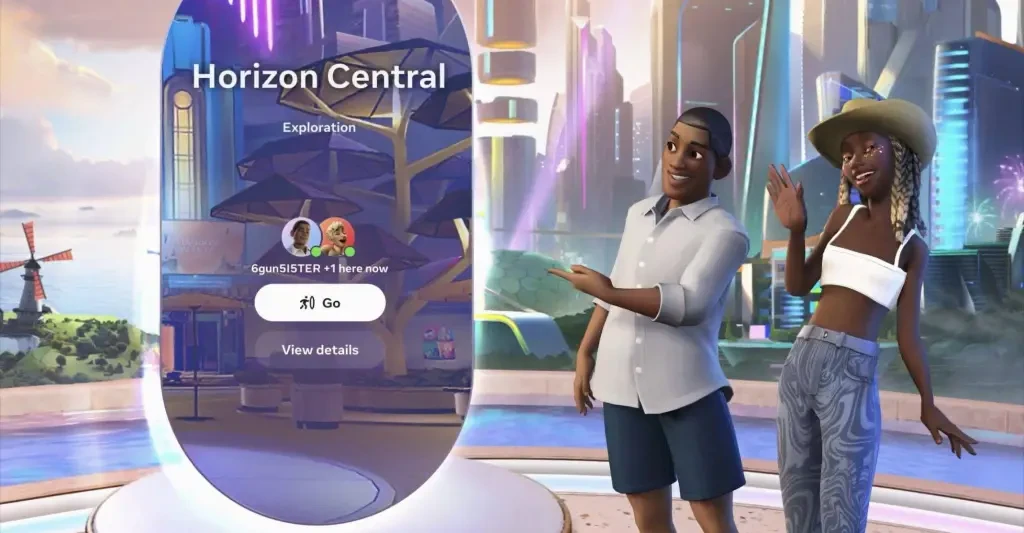

Meta, short for “metaverse,” is the name Facebook adopted when it rebranded to emphasize Mark Zuckerberg’s commitment to new technology. Meta was one of the first tech giants to aggressively pursue the metaverse, and despite numerous challenges along the way, the metaverse remains an essential topic at every Meta product launch.

At the event, Zuckerberg introduced new enhancements to immersive social features. Quest users can now create virtual avatars and team up to play games, work out, or attend a virtual concert together.

With the release of the Quest 3S, Meta also announced that the Quest 2 and Quest Pro will be discontinued, and that the Quest 3’s price has been lowered from $649 to $499. The new products will be available for shipping after October 15.

Llama 3.2 Launch: Multimodal Capabilities Bring AR Glasses to Life

Compared to the minimal changes of the Meta Quest 3S, the new features of Meta AI shine brighter. At the event, Llama 3.2, Meta’s latest large language model (LLM), took center stage with its impressive multimodal capabilities.

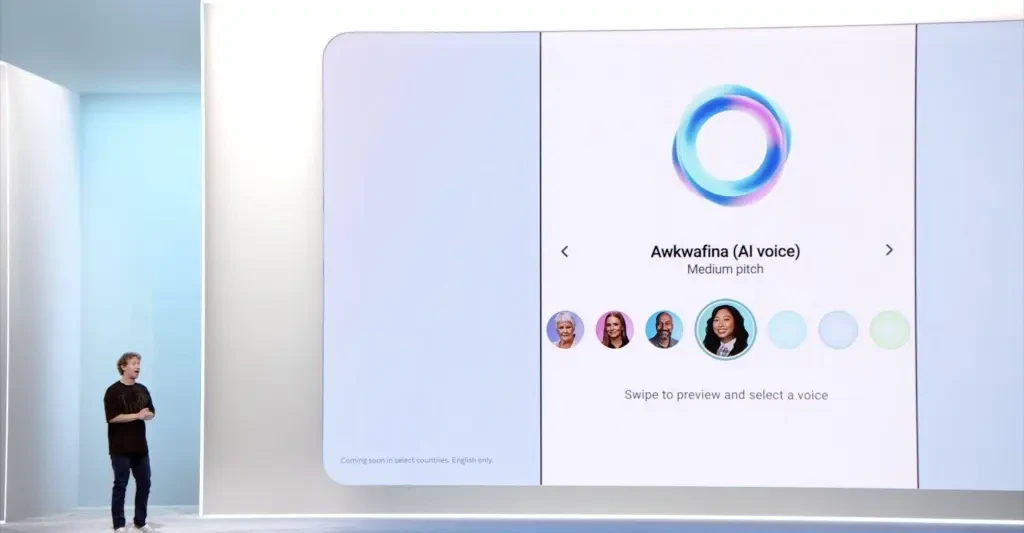

Mark Zuckerberg announced that Meta AI now includes a voice feature. Users can ask it questions or chat with it in Messenger, Facebook, WhatsApp, and Instagram, and receive spoken replies.

Not only can users interact with Meta AI via voice, but they can also choose from a range of celebrity voices, including Judi Dench, John Cena, Awkwafina, and Kristen Bell.

Zuckerberg demonstrated this feature live on stage. Overall, the response time was fast, the answers were fairly accurate, and the voice intonation sounded close to natural human conversation. Users can even interrupt the conversation at any point to insert a new topic or question.

Though there were a few glitches during the demo, Zuckerberg openly acknowledged that this is expected with a demo of an evolving technology, and he emphasized that these are areas they’re working on improving.

In addition to the voice interaction, Meta AI’s AI Studio feature allows users to create their own AI characters tailored to their preferences, interests, and needs. These AI characters can help generate memes, offer travel advice, or simply engage in casual conversation.

However, judging by the demos, the upcoming AI translation feature appears to be more practical. Currently, all devices equipped with Meta AI support real-time voice translation. During the event, two presenters wearing Ray-Ban Meta glasses held a real-time conversation in English and Spanish, showcasing the device’s ability to perform live translations.

Ray-Ban Meta captures the speech through its microphone and quickly translates it into the wearer’s native language. Although the translation is relatively fast, longer sentences can cause brief pauses, which makes the conversation feel slightly awkward. In some cases, the AI even interrupts the speaker mid-sentence.

Another feature, the translation of video audio, goes a step further. Compared to the real-time translation mentioned earlier, this could be called an “advanced translation feature.” Meta AI can translate the audio in online videos into another language and, impressively, preserve the speaker’s original tone, vocal characteristics, and even the accent they have when speaking English.

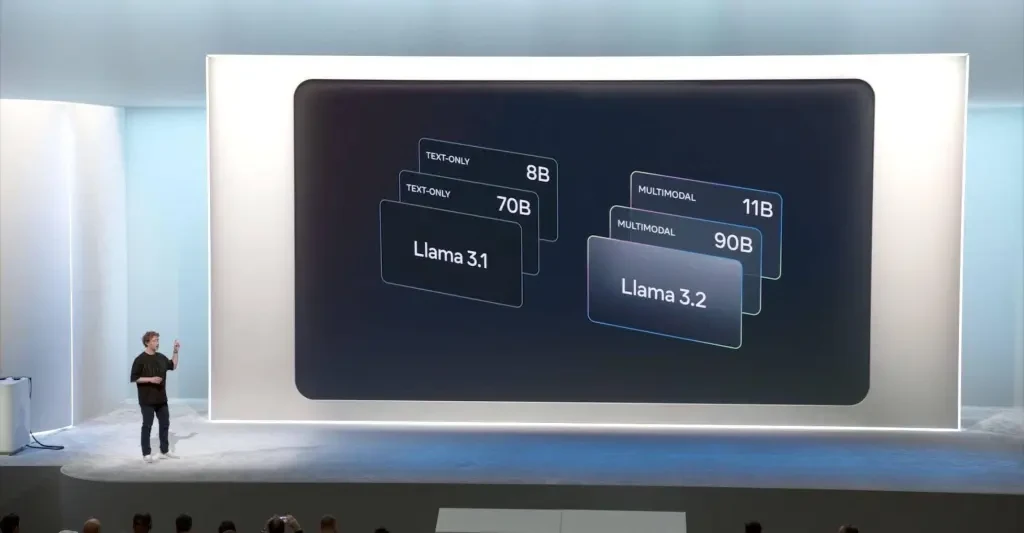

Meta AI’s series of updates at the event all share a common driving force: Llama 3.2.

In July of this year, Meta introduced Llama 3.1, a breakthrough model with 405 billion parameters. It was the most powerful open-source model Meta had developed, and one of the strongest in the world.

Surprisingly, in just two months, Llama 3.2 has arrived.

Llama 3.2 is Meta’s first open-source AI model with multimodal capabilities, meaning it can process both text and images. It comes in two vision models (11B and 90B parameters) and two lightweight text-only models (1B and 3B). The lightweight models can process user inputs locally on a device, without relying on external servers.
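A rough back-of-the-envelope estimate shows why the 1B and 3B models are candidates for on-device use while the larger ones are not. The Python sketch below multiplies each nominal parameter count from the article by the bytes needed per parameter at common precisions (2 bytes for 16-bit floats, 1 byte for 8-bit, 0.5 bytes for 4-bit). It counts weights only and ignores activations and other runtime overhead. The function and dictionary names are made up for this example, and the results are estimates, not figures published by Meta.

```python
# Nominal parameter counts as named in the article (1B, 3B, 11B, 90B).
MODEL_PARAMS = {
    "Llama 3.2 1B": 1_000_000_000,
    "Llama 3.2 3B": 3_000_000_000,
    "Llama 3.2 11B": 11_000_000_000,
    "Llama 3.2 90B": 90_000_000_000,
}

# Storage cost of a single weight at common numeric precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Estimate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODEL_PARAMS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gb(params, precision):.1f} GB"
            for precision in BYTES_PER_PARAM
        )
        print(f"{name:<14} {row}")
```

At 16-bit precision the 1B model’s weights come to about 2 GB, which is within reach of a phone, while the 90B model’s weights come to about 180 GB, which is server territory.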

With the new capabilities of Llama 3.2, many devices powered by large models have expanded into more practical scenarios. Beyond the AI translation feature mentioned earlier, Ray-Ban Meta, now equipped with visual capabilities, can assist users more deeply in their daily tasks and routines.

For example, users can ask Ray-Ban Meta what kind of drinks can be made with an avocado, and there’s no need to explicitly mention “avocado” in the sentence. Just using a pronoun like “this” works, as the glasses can see the object.

Many people have forgotten where they parked their car at the mall. For those who frequently forget parking spot numbers, Ray-Ban Meta can now help by saving that information, so it’s easily retrievable when needed.

From calling a number on a poster and scanning a QR code on a brochure to helping with daily outfit choices, the camera in the glasses, combined with the newly upgraded visual AI, allows Ray-Ban Meta to fit into many corners of daily life.

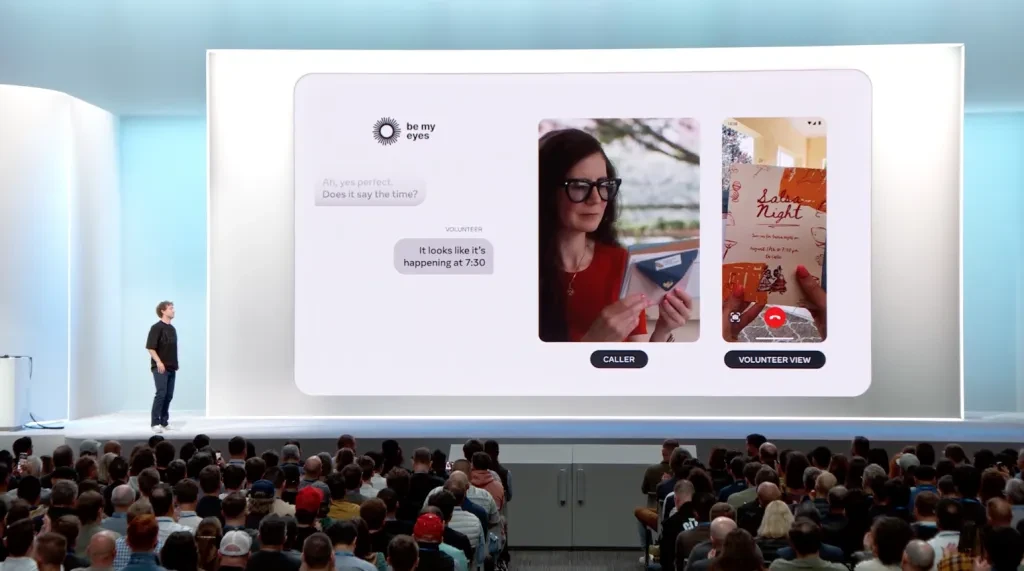

Thanks to this camera, the collaboration between Be My Eyes and Meta has become possible. Be My Eyes is a nonprofit platform that connects visually impaired individuals with sighted volunteers and companies through real-time video and AI. Volunteers or organizations can assist users in solving real-time issues via live video calls.

These technological advancements not only enhance the lives of the majority but also fill significant gaps in services for people with accessibility needs, providing convenience for everyone. This is where the true meaning of technology begins to unfold.

The Dawn of the Next Generation of Computing Devices

Although there were already expectations for Meta’s Orion glasses, when Mark Zuckerberg finally revealed the real product, it brought back a sense of amazement that has been missing from tech innovations for a long time.

This amazement wasn’t just due to Meta’s compelling vision of the future, but also because the product’s real-world performance is remarkably close to that vision. The Verge provided a sharp assessment after trying it: Orion is neither a distant mirage nor a fully completed product; it exists somewhere in between.

This is what sets Orion apart from many of Meta’s previous explorations into futuristic concepts: it’s not just a prototype stuck in the lab. Instead, Meta has gone “all in” on this next-generation device, perfectly blending AI and mixed reality (MR).

Orion is an ultimate AI device: it can see what the user sees, hear what the user hears, and combine this with the user’s real-world context to deliver more effective responses.

It is also an ultimate interaction and communication tool: no longer limited by small screens or bulky headsets, Orion allows virtual and real worlds to merge seamlessly, offering interaction anywhere and anytime.

It’s widely acknowledged in the industry that the smartphone as a computing device is nearing the end of its lifecycle. Companies like Apple, Meta, and even OpenAI are exploring what comes next.

Although Meta Orion is still in its prototype stage, the product looks quite promising so far. Whether it becomes the next mainstream smart device after smartphones remains to be seen, but Zuckerberg is confident about its potential.

Glasses and holograms are expected to become ubiquitous. If the billions of people who currently wear glasses can be moved to AI and mixed reality (MR) glasses, these could become one of the most successful products in world history, and according to Zuckerberg, the potential may be even greater than that.

Source from ifanr

Written by Fanbo Xiao
