Owning a vehicle with advanced features you don’t quite understand can be a stressful experience. Can an AI assistant help?

Voice assistant technology is no new concept to many. From Siri to Alexa, most of us have encountered AI-based technology in this form. In-vehicle voice assistants, however, may be new to some: they offer a hands-free way to deliver a variety of information inside the vehicle cabin.

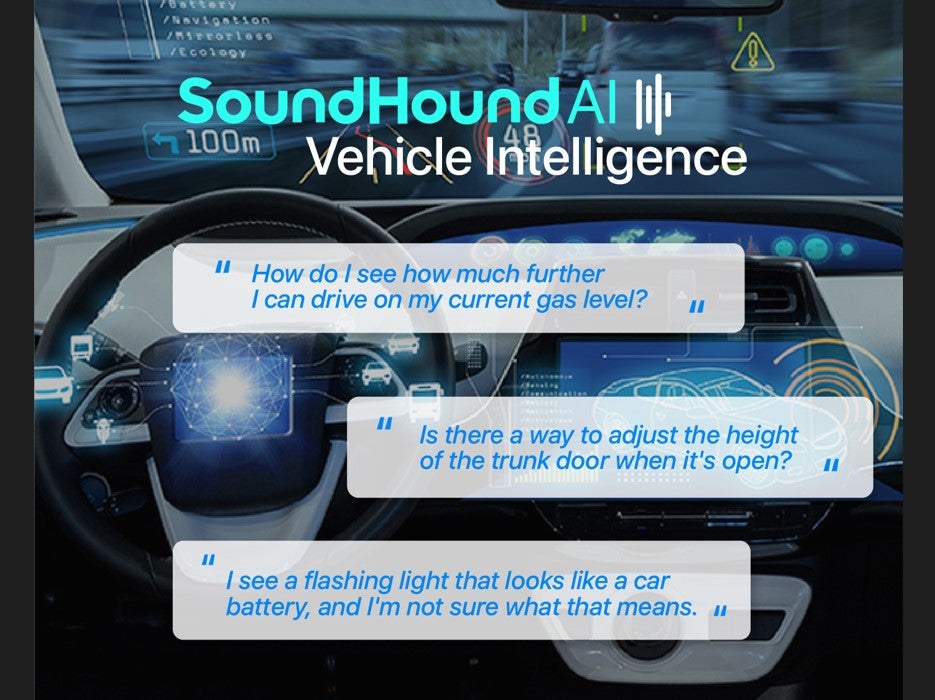

Audio and speech recognition company SoundHound says it was the first to offer an in-vehicle voice assistant that combines generative AI with an established voice assistant. Recently, the company announced further developments in the technology, giving drivers simpler access to vehicle handbook information.

We spoke to Michael Zagorsek, COO of SoundHound, to discuss the new features and what they can achieve, and to consider the future of this technology.

Just Auto (JA): Who is SoundHound and what does the company do?

Michael Zagorsek (MZ): We consider ourselves a leading independent provider of voice AI technology for automotive. In essence, what we do is we provide OEMs with a white labelled voice assistant so that drivers or passengers can interact with the vehicle information inside and outside the car with just their voice.

We started developing our technology back in 2005. We launched it in 2015 alongside a lot of what Amazon and Google were doing. The key difference is that the big tech providers were extending their voice services into the car, whereas what we were doing is augmenting the vehicle and therefore the OEM brands’ own capabilities and strengths.

We could ultimately do a lot of filtering and follow-ups for automotive applications, even in ways that big tech providers couldn’t. The key difference is that it’s not just putting a voice interface on something like ChatGPT; we have all of our domains: weather, navigation, points of interest – which are real-time, that can be coupled with something like ChatGPT or any other large language models. We believe those two things together (software engineering with machine learning) create the most robust assistant, and that’s something that we launched last year.

We are predominant in Hyundai brands and in multiple markets in Europe. We are in a deep strategic partnership with Stellantis and their 20 brands, as well as Togg, a Turkish automotive manufacturer, and we’re talking to several other OEMs.

Could you discuss the new generative AI feature that was recently launched?

One of the core elements of what we offer is this idea that you can access information outside the vehicle and inside the vehicle. The car manual itself has always been a challenge for automakers. It’s obviously thick and very comprehensive; finding things is obviously a challenge for all. This is one of those ‘pain points’ that everybody who owns a car has.

What we’re able to do is ingest that and then using our combination of software engineering and large language models, make that manual accessible with voice by using a proprietary mix of indexing and searching. The flexibility of large language models gives a lot of room for interpretation. People don’t have to know the name of the feature. They just say, for example: “What’s that feature if you’re on a hill so you won’t slide down?” The assistant determines that you’re talking about the hill-hold assist feature.

This really strengthens our value proposition. Essentially, we believe the voice experience should be an extension of the vehicle itself.

Secondly, as cars are becoming more software-centric, the idea of a printed manual becomes more obsolete because the software gets updated over the air (OTA) and there is obviously no up-to-date print version of that. More OEMs are going to have their manual available digitally within the infotainment system itself, but even that obviously presents challenges for access as you can imagine.

Will this technology be compatible with any vehicle?

Every vehicle has voice capability to a degree. I would say the legacy part of it is the embedded capability. This is prior to vehicles being connected to the Cloud or any services. They would have very limited functionality.

When we entered the market, we started offering a Cloud capability for connected cars. We would offer our capabilities through our platform on the vehicle, and through that we would make this vehicle intelligence feature available.

We’re not dogmatic about any one way car companies should be implementing this. In some cases, if they want to continue using Amazon or Google, they certainly can. Having an independent proprietary voice assistant living alongside that is, we feel, really an extension of their brand strategy.

We feel that a more well-rounded, branded assistant that features this technology is the better way to go, but there are obviously multiple ways that can happen.

What do you predict to happen in this space over the next three years?

I would say that the latest innovations around these generative AI technologies have really woken people up to the possibilities of truly conversational AI.

This works for cars as much as it does for smart speakers or anything that’s voice-enabled. ChatGPT opens the door to a lot of use cases that didn’t exist before. In the car, people can say: “I’m travelling to this location; do you have any advice for me?” Once people start to realise that it can meaningfully impact their lives, we will see a lot more activity there.

The other categories of things that people have flirted with, but it hasn’t manifested yet, are a little bit more along the lines of something we will call ‘emotional intelligence’. If I’m feeling a certain way, the voice assistant can recognise and respond accordingly. It is that notion of emotion detection. If I’m angry, is there an opportunity to manage that emotion through a response?

For example, right now when you ask AI for a joke, the text to speech is the same tone as if you were asking it to navigate to the nearest petrol station. I think there’s going to be a lot of innovation for the actual text to speech to modify its response based on the context of what it’s saying. I think that will really unlock more of that sense that you’re having a conversation with something that seems a bit more intelligent than a robot that just takes commands.

Also, speech identification and voice identification – the technology exists, but it hasn’t manifested in OEMs. So, imagine if you enter your vehicle and say hello. Your vehicle recognises your voice and says “hi”. That is certainly within reach; I could see that happening within the next few years.

Last but not least, monetization and commerce are very much on our roadmap. Part of our business is voice-enabled services for restaurants as well: food ordering, drive-throughs. There is lots of potential.

The idea is that you can order food or ask a business any questions through natural voice. Our strategy has always been to bring those services into the vehicle and make the car a much stronger gateway into the world around us. We see that happening within the next few years, and it’s interesting for OEMs because revenue is a challenge and their prices are becoming tighter. EVs, we know, are not being sold at a profit, so additional forms of revenue are critical, and we believe that voice interaction can unlock some of that.

Is there anything else that you think people should know about AI?

When people first envisioned a voice assistant, I would say in the early days – maybe seven to ten years ago – they saw the technology and what it could potentially do in practice. They were happy with that, but their imaginations were also a lot more powerful. Science fiction movies were introducing concepts like Jarvis for Iron Man. There was always this gap between what people wished it could do and what it did.

I think the advancements are coming so quickly that the idea that you could have an assistant that can talk to you and is there for you is more within reach than ever before.

We are on the cusp of people realising they can really speak to their voice assistant versus just command it. I think once people break through into that behaviour, they’ll never go back. Once you’ve unlocked value with AI, it just becomes part of what you do and how you interact. The thought is that we’re really in a position to truly embrace that conversational voice assistant.

Source from Just Auto

Disclaimer: The information set forth above is provided by just-auto.com independently of Alibaba.com. Alibaba.com makes no representation and warranties as to the quality and reliability of the seller and products.